How to Configure a Proxmox VE 4 Multiple Node Cluster

Proxmox VE 4 supports the installation of clusters and the central management of multiple Proxmox servers. You can manage multiple Proxmox servers from one web management console. This feature is really handy when you have a larger server farm.

Proxmox Cluster features:

- Centralized web management.

- Support for multiple authentication methods.

- Easy migration of virtual machines and containers within the cluster.

For more details, please check the Proxmox website.

In this tutorial, we will build a Proxmox 4 cluster with 3 Proxmox servers and 1 NFS Storage server. The Proxmox servers use Debian, the NFS server uses CentOS 7. The NFS storage is used to store ISO files, templates, and the virtual machines.

Prerequisites

- 3 Proxmox servers

pve1

IP : 192.168.1.114

FQDN : pve1.myproxmox.co

SSH port: 22

pve2

IP : 192.168.1.115

FQDN : pve2.myproxmox.co

SSH port: 22

pve3

IP : 192.168.1.116

FQDN : pve3.myproxmox.co

SSH port : 22

- 1 CentOS 7 server as NFS storage with IP 192.168.1.101

- Date and time must be synchronized on each Proxmox server.

Step 1 - Configure NFS Storage

In this step, we will set up the NFS storage node for Proxmox and allow multiple Proxmox nodes to read and write on the shared storage.

Log in to the NFS server with ssh:

ssh root@192.168.1.101

Create a new directory that we will share over NFS:

mkdir -p /var/nfsproxmox

Now add all Proxmox IP addresses to the NFS configuration file. I'll edit the "exports" file with vim:

vim /etc/exports

Paste the configuration below:

/var/nfsproxmox 192.168.1.114(rw,sync,no_root_squash)

/var/nfsproxmox 192.168.1.115(rw,sync,no_root_squash)

/var/nfsproxmox 192.168.1.116(rw,sync,no_root_squash)

Save the file and exit the editor.
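The three export lines differ only in the client IP, so they can be generated instead of typed by hand. A minimal sketch, assuming the node IPs and export options used in this step; the function name gen_exports is made up for illustration:

```shell
#!/bin/sh
# Sketch: print one /etc/exports line per Proxmox node.
# The directory and IPs are the ones used in this tutorial.
export_dir="/var/nfsproxmox"
nodes="192.168.1.114 192.168.1.115 192.168.1.116"

# gen_exports is a hypothetical helper, not an NFS tool.
gen_exports() {
    for ip in $nodes; do
        printf '%s %s(rw,sync,no_root_squash)\n' "$export_dir" "$ip"
    done
}

gen_exports
```

Review the printed lines first; once they look right, they could be appended with gen_exports >> /etc/exports.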

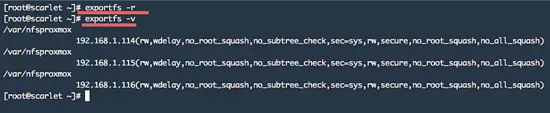

To activate the new configuration, re-export the NFS directory and make sure the shared directory is active:

exportfs -r

exportfs -v

Step 2 - Configure Host

The next step is to configure the hosts file on each Proxmox node.

Log into the pve1 server with ssh:

ssh root@192.168.1.114

Now edit the hosts file with vim:

vim /etc/hosts

Make sure the entry for pve1 is already in the file, then add pve2 and pve3 to the hosts file:

192.168.1.115 pve2.myproxmox.co pve2

192.168.1.116 pve3.myproxmox.co pve3

Save the file and reboot pve1:

reboot

Next, log in to the pve2 server with ssh:

ssh root@192.168.1.115

Edit the hosts file:

vim /etc/hosts

Add the configuration below:

192.168.1.114 pve1.myproxmox.co pve1

192.168.1.116 pve3.myproxmox.co pve3

Save the file and reboot:

reboot

Next, log in to the pve3 server with ssh:

ssh root@192.168.1.116

Edit the hosts file:

vim /etc/hosts

Now add the configuration below:

192.168.1.114 pve1.myproxmox.co pve1

192.168.1.115 pve2.myproxmox.co pve2
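The hosts edits on the three nodes follow one pattern: each node lists its two peers. A rough sketch of that pattern, assuming the names and IPs from the prerequisites; peer_hosts is a hypothetical helper, and it leaves the pvelocalhost alias off peer lines on the assumption that the alias belongs only to a node's own entry:

```shell
#!/bin/sh
# Hypothetical helper: print the /etc/hosts lines a node needs for its peers.
# Usage: peer_hosts <short name of the local node>
peer_hosts() {
    self="$1"
    while read -r ip name; do
        # Skip the local node; its own entry already exists in /etc/hosts.
        [ "$name" = "$self" ] && continue
        printf '%s %s.myproxmox.co %s\n' "$ip" "$name" "$name"
    done <<EOF
192.168.1.114 pve1
192.168.1.115 pve2
192.168.1.116 pve3
EOF
}

# Lines to add on pve3:
peer_hosts pve3
```

Calling peer_hosts pve1 or peer_hosts pve2 prints the lines for the other two nodes in the same way.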

Save the file and reboot pve3:

reboot

Step 3 - Create the cluster on Proxmox server pve1

Before creating the cluster, make sure the date and time are synchronized on all nodes and that the ssh daemon is running on port 22.
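One rough way to compare clocks is to read the epoch time on each node and look at the difference in seconds. The sketch below is only an illustration, not a Proxmox feature: clock_skew is a hypothetical helper, and the ssh call is left as a comment so the block runs on any machine.

```shell
#!/bin/sh
# Hypothetical helper: absolute difference between two epoch timestamps.
clock_skew() {
    d=$(( $1 - $2 ))
    [ "$d" -lt 0 ] && d=$(( 0 - d ))
    echo "$d"
}

local_now=$(date +%s)

# On a real setup, fetch the peer's clock instead, for example:
#   remote_now=$(ssh -p 22 root@192.168.1.115 date +%s)
remote_now="$local_now"   # placeholder so the sketch runs without a peer

echo "skew in seconds: $(clock_skew "$local_now" "$remote_now")"
```

A difference of more than a second or two suggests that time synchronization (for example via NTP) should be fixed before the cluster is created.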

Log in to the pve1 server and create the new cluster:

ssh root@192.168.1.114

pvecm create mynode

Result:

Corosync Cluster Engine Authentication key generator.

Gathering 1024 bits for key from /dev/urandom.

Writing corosync key to /etc/corosync/authkey.

The command explained:

pvecm: Proxmox VE cluster manager toolkit

create: Generate new cluster configuration

mynode: cluster name

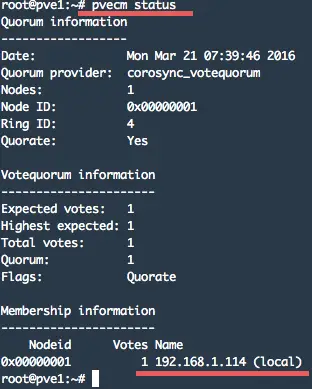

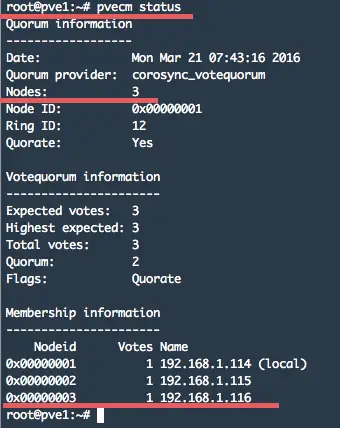

Now check the cluster with the command below:

pvecm status

Step 3 - Add pve2 and pve3 to cluster

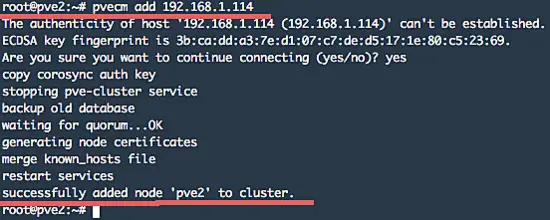

In this step, we will add the Proxmox node pve2 to the cluster. Log in to the pve2 server and add it to the "mynode" cluster on pve1:

ssh root@192.168.1.115

pvecm add 192.168.1.114

add: adds the node pve2 to the cluster that we created on pve1 (IP 192.168.1.114).

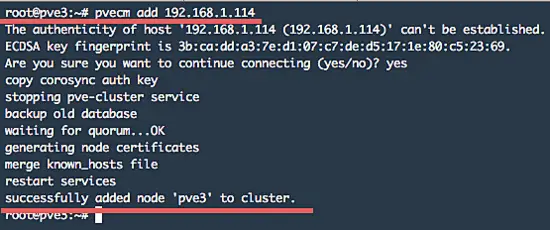

Then add pve3 to the cluster.

ssh root@192.168.1.116

pvecm add 192.168.1.114

Step 4 - Check the Proxmox cluster

If the steps above have been executed without an error, check the cluster configuration with:

pvecm status

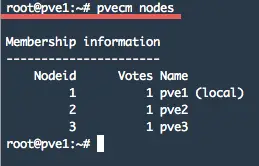
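The status check can also be scripted. The sketch below assumes that the status text contains a line of the form "Quorate: Yes" when the cluster has quorum; the sample text is an abridged stand-in in that assumed format, and is_quorate is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the status text on stdin reports quorum.
is_quorate() {
    grep -Eq '^Quorate:[[:space:]]+Yes'
}

# Abridged stand-in for the status text (assumed format).
# On a cluster node, use instead: pvecm status | is_quorate
sample='Nodes:            3
Quorate:          Yes'

if printf '%s\n' "$sample" | is_quorate; then
    echo "cluster has quorum"
else
    echo "cluster has NO quorum"
fi
```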

If you want to see the nodes, use the command below:

pvecm nodes

Step 5 - Add the NFS share to the Proxmox Cluster

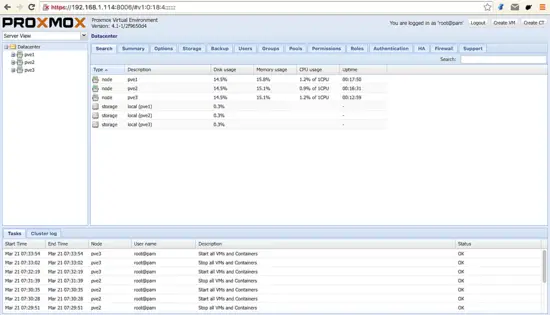

Open Proxmox server pve1 with your browser: https://192.168.1.114:8006/ and log in with your password.

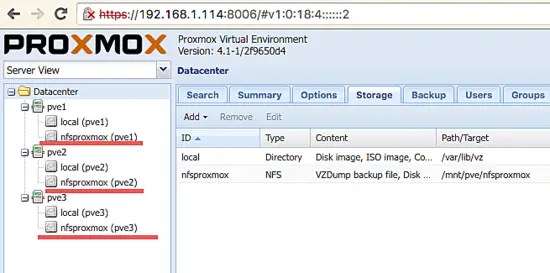

You can see the pve1, pve2 and pve3 servers on the left side.

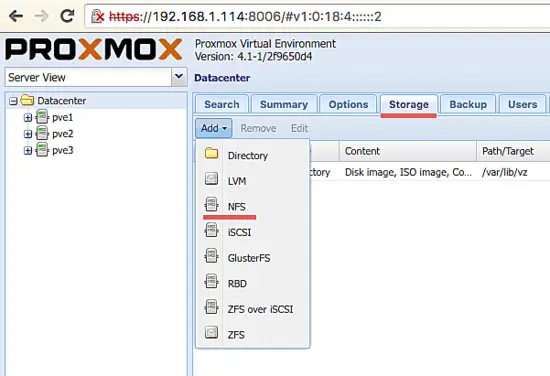

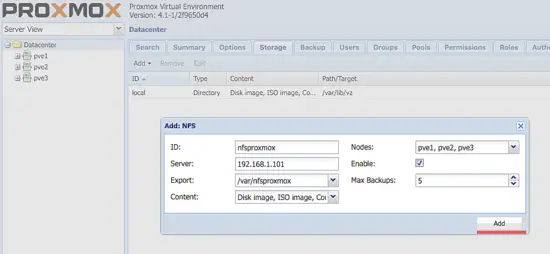

Now go to the "Storage" tab and click "Add". Choose the storage type; here we use NFS, served by the CentOS server.

Fill in the details of the NFS server:

ID: Name of the storage

Server: IP address of the NFS server

Export: The shared directory, detected automatically

Content: Content types to keep on the storage

Nodes: Nodes on which the storage is available (here pve1, pve2 and pve3)

Backups: Maximum number of backups to keep

Click add.

And now you can see the NFS storage is available on all Proxmox nodes.
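The same storage can also be defined from the shell with pvesm, the Proxmox storage manager. The sketch below only prints the command so that it can be reviewed first. The storage ID nfsproxmox and the content types are assumptions; adjust them to what you chose in the dialog.

```shell
#!/bin/sh
# Assumed values: the storage ID and content list are illustrative only.
storage_id="nfsproxmox"
nfs_server="192.168.1.101"
nfs_export="/var/nfsproxmox"
content="images,iso,vztmpl"
nodes="pve1,pve2,pve3"

# Build the command as text so it can be checked before it is run.
build_pvesm_cmd() {
    printf 'pvesm add nfs %s --server %s --export %s --content %s --nodes %s\n' \
        "$storage_id" "$nfs_server" "$nfs_export" "$content" "$nodes"
}

build_pvesm_cmd
```

Copy the printed line to a shell on one of the cluster nodes to run it; the storage definition is shared by the whole cluster.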

Conclusion

Proxmox VE 4 supports clusters of up to 32 physical nodes. The centralized Proxmox management makes it easy to configure all available nodes from one place. A Proxmox cluster has many advantages; for example, it is easy to migrate a VM from one node to another. You can build a multi-node setup with 2 Proxmox servers, but if you want to set up Proxmox for high availability, you need 3 or more Proxmox nodes.

------------------------------------------------------------------------------------------------------------------

ATAU

------------------------------------------------------------------------------------------------------------------

ATAU

Setting Up the NFS Server

Step One - Download the Required Software

Start off by using yum to install the NFS programs:

yum install nfs-utils nfs-utils-lib

Subsequently, run several startup scripts for the NFS server:

chkconfig nfs on

service rpcbind start

service nfs start

Step Two - Export the Shared Directory

OR

NFS Server and Client Installation on CentOS 7

This guide explains how to configure an NFS server on CentOS 7. Network File System (NFS) is a popular distributed filesystem protocol that enables users to mount remote directories on their server. NFS lets you leverage storage space in a different location and allows you to write onto the same space from multiple servers or clients in an effortless manner. It, thus, works fairly well for directories that users need to access frequently. This tutorial explains the process of mounting an NFS share on a CentOS 7.6 server in simple and easy-to-follow steps.

1 Preliminary Note

I have a freshly installed CentOS 7 server, on which I am going to install the NFS server. My CentOS server has the hostname server1.example.com and the IP 192.168.0.100.

If you don't have a CentOS server installed yet, use this tutorial for the basic operating system installation. In addition to the server, we need a CentOS 7 client machine; this can be either a server or a desktop system. In my case, I will use a CentOS 7 desktop with hostname client1.example.com and IP 192.168.0.101 as the client. I will run all the commands in this tutorial as the root user.

2 At NFS server end

As the first step, we will install the nfs-utils package on the CentOS server with yum:

yum install nfs-utils

Now create the directory that will be shared by NFS:

mkdir /var/nfsshare

Change the permissions of the folder as follows:

chmod -R 755 /var/nfsshare

chown nfsnobody:nfsnobody /var/nfsshare

We use /var/nfsshare as the shared folder. If we shared an existing directory such as /home instead, changing its permissions this way would cause massive permission problems and ruin the whole hierarchy. So if we want to share the /home directory, its permissions must not be changed.

Next, we need to start the services and enable them to be started at boot time.

systemctl enable rpcbind

systemctl enable nfs-server

systemctl enable nfs-lock

systemctl enable nfs-idmap

systemctl start rpcbind

systemctl start nfs-server

systemctl start nfs-lock

systemctl start nfs-idmap
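The eight commands above repeat the same two actions for four services, which a loop can express. A sketch, assuming a dry-run wrapper so that nothing changes until you opt in; the run function and the DRY_RUN variable are hypothetical names, not part of systemd:

```shell
#!/bin/sh
# DRY_RUN=1 (the default here) only prints the commands; DRY_RUN=0 executes them.
DRY_RUN="${DRY_RUN:-1}"

# Hypothetical wrapper: print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

enable_nfs_services() {
    for svc in rpcbind nfs-server nfs-lock nfs-idmap; do
        run systemctl enable "$svc"
        run systemctl start "$svc"
    done
}

enable_nfs_services
```

Running the script as root with DRY_RUN=0 would enable and start the services for real.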

Now we will share the NFS directory over the network as follows:

nano /etc/exports

We will make two sharing points /home and /var/nfsshare. Edit the exports file as follows:

/var/nfsshare 192.168.0.101(rw,sync,no_root_squash,no_all_squash)

/home 192.168.0.101(rw,sync,no_root_squash,no_all_squash)

Note that 192.168.0.101 is the IP of the client machine. To give other clients access, add an entry for each client IP, or use "*" instead of an IP to allow access from any address.

The server and the client must be able to ping each other.

Finally, restart the NFS service so the new exports take effect:

systemctl restart nfs-server

We also need to allow the NFS services through the CentOS 7 firewall in the public zone with firewall-cmd:

firewall-cmd --permanent --zone=public --add-service=nfs

firewall-cmd --permanent --zone=public --add-service=mountd

firewall-cmd --permanent --zone=public --add-service=rpc-bind

firewall-cmd --reload

Note: If this is not done, the client will get a connection timeout error.

Now we are ready with the NFS server part.

3 NFS client end

In my case, I have a CentOS 7 desktop as the client. Other CentOS versions will work the same way. Install the nfs-utils package as follows:

yum install nfs-utils

Now create the NFS directory mount points:

mkdir -p /mnt/nfs/home

mkdir -p /mnt/nfs/var/nfsshare

Next, we will mount the NFS shared home directory in the client machine as shown below:

mount -t nfs 192.168.0.100:/home /mnt/nfs/home/

This mounts /home from the NFS server. Next, we will mount the /var/nfsshare directory:

mount -t nfs 192.168.0.100:/var/nfsshare /mnt/nfs/var/nfsshare/

Now we are connected to the NFS share. We will cross-check it as follows:

df -kh

[root@client1 ~]# df -kh

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/centos-root 39G 1.1G 38G 3% /

devtmpfs 488M 0 488M 0% /dev

tmpfs 494M 0 494M 0% /dev/shm

tmpfs 494M 6.7M 487M 2% /run

tmpfs 494M 0 494M 0% /sys/fs/cgroup

/dev/mapper/centos-home 19G 33M 19G 1% /home

/dev/sda1 497M 126M 372M 26% /boot

192.168.0.100:/var/nfsshare 39G 980M 38G 3% /mnt/nfs/var/nfsshare

192.168.0.100:/home 19G 33M 19G 1% /mnt/nfs/home

[root@client1 ~]#

So we are connected with the NFS share.
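Instead of reading the df output by eye, the mount table can be checked in a script. A minimal sketch, assuming a Linux client where /proc/mounts lists active mounts; is_nfs_mounted is a hypothetical helper, and its optional second argument exists only so the check can be tried against a saved copy of the table:

```shell
#!/bin/sh
# Hypothetical helper: succeed if <mount point> appears in the mount table
# with a filesystem type starting with "nfs" (nfs, nfs4).
# Usage: is_nfs_mounted <mount point> [mount table file]
is_nfs_mounted() {
    target="$1"
    table="${2:-/proc/mounts}"
    awk -v t="$target" '$2 == t && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$table" 2>/dev/null
}

for mp in /mnt/nfs/home /mnt/nfs/var/nfsshare; do
    if is_nfs_mounted "$mp"; then
        echo "$mp: mounted over NFS"
    else
        echo "$mp: not mounted"
    fi
done
```

Note that the mount point is given without a trailing slash, because that is how it appears in the mount table.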

Now we will check the read/write permissions on the shared path. On the client, enter the command:

touch /mnt/nfs/var/nfsshare/test_nfs

So we successfully configured an NFS-share.
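The touch test only proves that a file can be created. A slightly stricter sketch writes a small probe file, reads it back, and removes it again. The helper name check_rw is made up for illustration, and the demo runs on a scratch directory so that it works on any machine.

```shell
#!/bin/sh
# Hypothetical helper: verify that a directory accepts writes and reads.
check_rw() {
    dir="$1"
    probe="$dir/.rw_probe_$$"   # $$ is the shell PID, which makes a name clash unlikely
    if echo ok > "$probe" && [ "$(cat "$probe")" = "ok" ]; then
        rm -f "$probe"
        echo "read/write OK: $dir"
    else
        echo "cannot write to $dir" >&2
        return 1
    fi
}

# Demo on a scratch directory; on the client, pass /mnt/nfs/var/nfsshare instead.
scratch=$(mktemp -d)
check_rw "$scratch"
rmdir "$scratch"
```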

4 Permanent NFS mounting

Without further configuration, we have to re-mount the NFS share on the client after every reboot. Here are the steps to mount it permanently by adding the NFS share to the /etc/fstab file of the client machine:

nano /etc/fstab

Add the entries like this:

[...]

192.168.0.100:/home /mnt/nfs/home nfs defaults 0 0

192.168.0.100:/var/nfsshare /mnt/nfs/var/nfsshare nfs defaults 0 0

Note that 192.168.0.100 is the IP address of the NFS server; it will vary in your case.

This makes the mount of the NFS share permanent. You can now reboot the machine, and the shares will be mounted again automatically.
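If the fstab entries are added by a script, it helps to make the step safe to repeat, so that running it twice does not create duplicate lines. A sketch under that assumption; add_fstab_entry is a hypothetical helper, and the demo writes to a scratch file, not to the real /etc/fstab:

```shell
#!/bin/sh
# Hypothetical helper: append an NFS entry only if source and mount point
# are not already listed together in the given fstab file.
# Usage: add_fstab_entry <fstab file> <server:/export> <mount point>
add_fstab_entry() {
    fstab="$1"; src="$2"; dir="$3"
    if awk -v s="$src" -v d="$dir" '$1 == s && $2 == d { f = 1 } END { exit !f }' "$fstab"; then
        echo "already present: $src"
    else
        printf '%s %s nfs defaults 0 0\n' "$src" "$dir" >> "$fstab"
    fi
}

# Demo against a scratch file; the second call finds the entry and adds nothing.
scratch=$(mktemp)
add_fstab_entry "$scratch" 192.168.0.100:/home /mnt/nfs/home
add_fstab_entry "$scratch" 192.168.0.100:/home /mnt/nfs/home
cat "$scratch"
rm -f "$scratch"
```

On the client, the first argument would be /etc/fstab; keeping a backup copy of that file before editing it is a sensible precaution.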

Cheers, now we have a successfully configured NFS-server over CentOS 7 :)
