What Is Ceph Storage

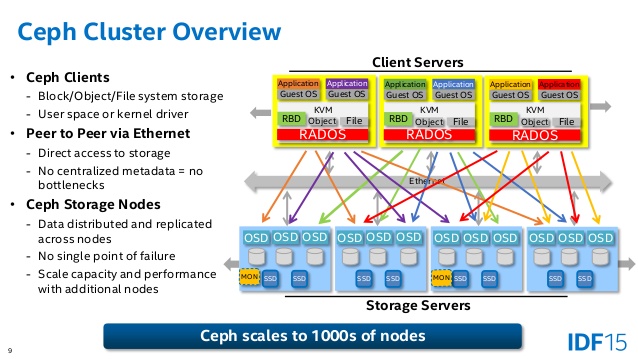

According to the Ceph website, Ceph can transform IT infrastructure and lets us manage very large amounts of data. The foundation of Ceph is RADOS (Reliable Autonomic Distributed Object Store), which provides object, block, and file system storage in a single cluster, making Ceph flexible, reliable, and easy to manage.

With RADOS, Ceph provides highly scalable storage in which thousands of clients or thousands of KVM guests can access petabytes to exabytes of data. Each application can use object, block, or file system storage on the same RADOS cluster, which is what makes Ceph storage flexible. Ceph is free to use and runs on cheaper commodity hardware. Ceph is a new and better way of storing data.

A Ceph storage cluster uses the CRUSH (Controlled Replication Under Scalable Hashing) algorithm, which ensures that data is distributed across all cluster members and that every cluster node can serve data without a bottleneck.
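The idea behind hash-based placement can be illustrated with a toy sketch. This is not the real CRUSH algorithm (which also takes the cluster map, weights, and failure domains into account); it is plain hash-modulo placement, and the function name `place_object`, the object names, and the OSD names are made up for illustration. It only shows that a location can be computed from the object name instead of being looked up in a central table.

```shell
# Toy placement: hash the object name and pick an OSD by modulo.
# NOT the real CRUSH algorithm; names below are illustrative assumptions.
place_object() {
  object_name=$1
  osd_count=$2
  # cksum prints "<crc> <byte count>"; keep only the CRC value.
  crc=$(printf '%s' "$object_name" | cksum | cut -d ' ' -f 1)
  echo "osd$(( crc % osd_count + 1 ))"
}

# The same name always maps to the same OSD, with no lookup table involved.
for obj in photo.jpg backup.tar vm-disk-01; do
  echo "$obj -> $(place_object "$obj" 3)"
done
```

Because the result depends only on the name and the number of OSDs, any client can compute it independently, which is the property that avoids a central bottleneck.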

Ceph block storage can be used as a virtual disk on Linux hosts or virtual machines. The RADOS technology underlying Ceph enables snapshots and replication.

Ceph object storage can be accessed through REST (Representational State Transfer) APIs compatible with Amazon S3 (Simple Storage Service) and OpenStack Swift.

Dari http://www.slideshare.net/LarryCover/ceph-open-source-storage-software-optimizations-on-intel-architecture-for-cloud-workloads kita mendapatkan gambar seperti berikut ini

Informasi lebih lanjut silahkan mengunjungi

Informasi lebih lanjut silahkan mengunjungi

1. http://ceph.com/ceph-storage/ .

2. http://searchstorage.techtarget.com/definition/Ceph.

Kunjungi www.proweb.co.id untuk menambah wawasan anda.

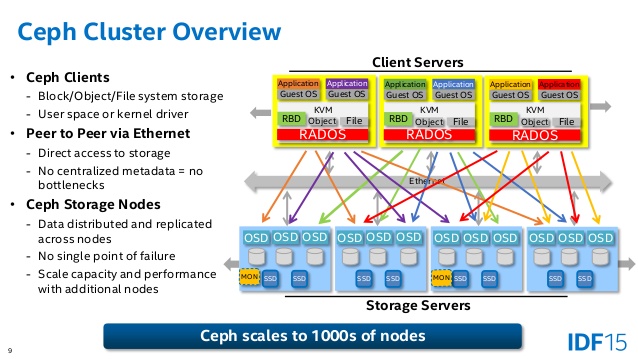

Dari informasi yang ada pada website Cepth dikatakan bahwa Ceph memiliki kemampuan melakukan transformasi infrastruktur IT dan memampukan kita melakukan manajemen data yang besar. Landasan dari Ceph adalah RADOS yang merupakan kependekan dari Reliable Autonomic Distributed Object Storage yang menyediakan storage untuk object, block dan file system dalam sebuah cluster membuat Ceph fleksibel, dapat diandalkan dan mudah dimanaje.

Ceph dengan kemampuan RADOS menyediakan storage dengan scalability yang luar biasa dimana ribuan client atau ribuan KVM mengakses petabytes hingga exabytes data. Masing-masing aplikasi dapat menggunakan object, block atau filesytem pada cluster RADOS yang sama yang berarti kita memiliki kita memiliki storage Ceph yang fleksibel. Kita dapat menggunakan Ceph ini secara gratis menggunakan komoditas hardware yang lebih murah. Ceph merupakan cara baru yang lebih baik dalam menyimpan data.

Cepth storage cluster menggunakan algoritma CRUSH (Controlled Replication Under Scalable Hashing) yang memastikan bahwa data terdistribusi ke seluruh anggota cluster dan semua cluster node dapat menyediakan data tanpa ada bottleneck.

Ceph block storage dapat dijadikan virtual disk pada Linux maupun virtual machine. Teknologi RADOS yang dimiliki Ceph memampukan Ceph melakukan snapshot dan replikasi.

Ceph object storage dapat diakses melalui Amazon S3 (Simple StorageService) dan OpenStack Swift REST (Representational State Transfer).

Dari http://www.slideshare.net/LarryCover/ceph-open-source-storage-software-optimizations-on-intel-architecture-for-cloud-workloads kita mendapatkan gambar seperti berikut ini

Informasi lebih lanjut silahkan mengunjungi

1. http://ceph.com/ceph-storage/ .

2. http://searchstorage.techtarget.com/definition/Ceph.

Kunjungi www.proweb.co.id untuk menambah wawasan anda.

1. http://ceph.com/ceph-storage/ .

2. http://searchstorage.techtarget.com/definition/Ceph.

Kunjungi www.proweb.co.id untuk menambah wawasan anda.

How to build a Ceph Distributed Storage Cluster on CentOS 7

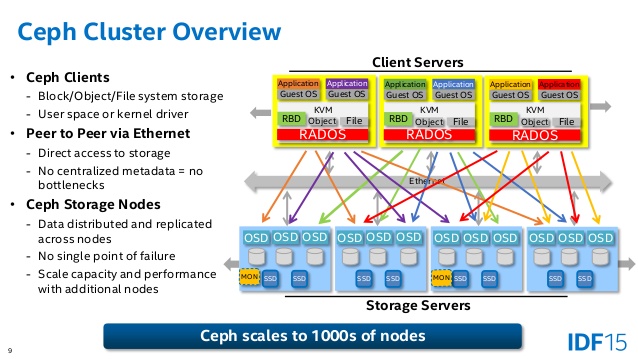

Ceph is a widely used open source storage platform. It provides high performance, reliability, and scalability. As a free distributed storage system, Ceph provides interfaces for object, block, and file-level storage. Ceph is built to provide a distributed storage system without a single point of failure.

In this tutorial, I will guide you to install and build a Ceph cluster on CentOS 7. A Ceph cluster requires these Ceph components:

- Ceph OSDs (ceph-osd) - Handles the data store, data replication and recovery. A Ceph cluster needs at least two Ceph OSD servers. I will use three CentOS 7 OSD servers here.

- Ceph Monitor (ceph-mon) - Monitors the cluster state, OSD map and CRUSH map. I will use one server.

- Ceph Metadata Server (ceph-mds) - This is needed to use Ceph as a file system.

Prerequisites

- 6 server nodes, all with CentOS 7 installed.

- Root privileges on all nodes.

The servers in this tutorial will use the following hostnames and IP addresses.

| Hostname | IP address |
|---|---|
| ceph-admin | 10.0.15.10 |
| mon1 | 10.0.15.11 |
| osd1 | 10.0.15.21 |
| osd2 | 10.0.15.22 |
| osd3 | 10.0.15.23 |
| client | 10.0.15.15 |

All OSD nodes need two partitions, one root (/) partition and an empty partition that is used as Ceph data storage later.

Step 1 - Configure All Nodes

In this step, we will configure all 6 nodes to prepare them for the installation of the Ceph cluster. Run all commands below on every node, and make sure an SSH server is installed on each of them.

Create a Ceph User

Create a new user named 'cephuser' on all nodes.

useradd -d /home/cephuser -m cephuser

passwd cephuser

After creating the new user, we need to configure sudo for 'cephuser'. This user must be able to run commands as root and obtain root privileges without a password.

Run the command below to create a sudoers file for the user and edit the /etc/sudoers file with sed.

echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser

chmod 0440 /etc/sudoers.d/cephuser

sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers

Install and Configure NTP

Install NTP to synchronize the date and time on all nodes. Run the ntpdate command to set the date and time via the NTP protocol; we will use the US pool NTP server. Then enable and start the NTP service so that it runs at boot time.

yum install -y ntp ntpdate ntp-doc

ntpdate 0.us.pool.ntp.org

hwclock --systohc

systemctl enable ntpd.service

systemctl start ntpd.service

Install Open-vm-tools

If you are running all nodes inside VMware, you need to install this virtualization utility. Otherwise skip this step.

yum install -y open-vm-tools

Disable SELinux

Disable SELinux on all nodes by editing the SELinux configuration file with the sed stream editor.

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

Configure Hosts File

Edit the /etc/hosts file on all nodes with the vim editor and add lines with the IP addresses and hostnames of all cluster nodes.

vim /etc/hosts

Paste the configuration below:

10.0.15.10 ceph-admin

10.0.15.11 mon1

10.0.15.21 osd1

10.0.15.22 osd2

10.0.15.23 osd3

10.0.15.15 client

Save the file and exit vim.
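If you prefer to script this instead of editing by hand, the sketch below appends an entry only when the hostname is not already present, so it is safe to run more than once. The helper name `add_host_entry` is an assumption made for illustration, and the demo writes to a temporary file; on a real node you would pass /etc/hosts and run it as root.

```shell
# Append "IP hostname" to a hosts file unless the hostname is already listed.
# add_host_entry is a hypothetical helper, not part of any Ceph tooling.
add_host_entry() {
  hosts_file=$1
  entry_ip=$2
  entry_name=$3
  # Look for an exact match in any hostname column (field 2 onwards).
  if ! awk -v n="$entry_name" \
      '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
      "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$entry_ip" "$entry_name" >> "$hosts_file"
  fi
}

# Demo against a temporary file; use /etc/hosts on a real node.
demo_hosts=$(mktemp)
while read -r ip name; do
  add_host_entry "$demo_hosts" "$ip" "$name"
done <<'EOF'
10.0.15.10 ceph-admin
10.0.15.11 mon1
10.0.15.21 osd1
10.0.15.22 osd2
10.0.15.23 osd3
10.0.15.15 client
EOF
cat "$demo_hosts"
```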

Now you can try to ping between the servers with their hostname to test the network connectivity. Example:

ping -c 5 mon1

Step 2 - Configure the SSH Server

In this step, I will configure the ceph-admin node. The admin node is used for configuring the monitor node and the osd nodes. Login to the ceph-admin node and become the 'cephuser'.

ssh root@ceph-admin

su - cephuser

The admin node is used for installing and configuring all cluster nodes, so the user on the ceph-admin node must have privileges to connect to all nodes without a password. We have to configure password-less SSH access for 'cephuser' on 'ceph-admin' node.

Generate the ssh keys for 'cephuser'.

ssh-keygen

Leave the passphrase empty.

Next, create the configuration file for the ssh configuration.

vim ~/.ssh/config

Paste configuration below:

Host ceph-admin

Hostname ceph-admin

User cephuser

Host mon1

Hostname mon1

User cephuser

Host osd1

Hostname osd1

User cephuser

Host osd2

Hostname osd2

User cephuser

Host osd3

Hostname osd3

User cephuser

Host client

Hostname client

User cephuser

Save the file.

Change the permission of the config file.

chmod 644 ~/.ssh/config
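Since every stanza follows the same pattern, the file can also be generated with a small loop. This is a sketch only; `ssh_config_stanza` is a made-up helper name, and the output goes to standard output so you can inspect it before redirecting it into ~/.ssh/config.

```shell
# Print one ssh_config stanza for a node; $1 = hostname, $2 = login user.
# ssh_config_stanza is a hypothetical helper used for illustration.
ssh_config_stanza() {
  printf 'Host %s\n    Hostname %s\n    User %s\n\n' "$1" "$1" "$2"
}

# Same node list and user as in the configuration above.
for node in ceph-admin mon1 osd1 osd2 osd3 client; do
  ssh_config_stanza "$node" cephuser
done
```

To write the file directly, redirect the loop's output, for example by appending `> ~/.ssh/config` after `done`.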

Now add the SSH key to all nodes with the ssh-copy-id command.

ssh-keyscan osd1 osd2 osd3 mon1 client >> ~/.ssh/known_hosts

ssh-copy-id osd1

ssh-copy-id osd2

ssh-copy-id osd3

ssh-copy-id mon1

ssh-copy-id client

Type in your 'cephuser' password when requested.
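To confirm that key-based login works on every node, a loop like the following can help. It is a sketch under assumptions: `check_node`, `check_all_nodes`, and the `SSH_PROBE` override variable are hypothetical names, and the script relies on the shell splitting `$SSH_PROBE` into words (as sh and bash do). `BatchMode=yes` makes ssh fail instead of prompting for a password, so a node without a working key is reported rather than hanging.

```shell
# SSH_PROBE is a hypothetical override hook; by default it is a
# non-interactive ssh that never asks for a password.
SSH_PROBE=${SSH_PROBE:-ssh -o BatchMode=yes -o ConnectTimeout=5}

# Run a no-op command on the node; succeeds only if login works.
check_node() {
  $SSH_PROBE "$1" true >/dev/null 2>&1
}

# Report every node and return non-zero if any of them failed.
check_all_nodes() {
  failed=0
  for node in "$@"; do
    if check_node "$node"; then
      echo "$node: ok"
    else
      echo "$node: FAILED"
      failed=1
    fi
  done
  return "$failed"
}

# Usage on ceph-admin, as cephuser:
#   check_all_nodes osd1 osd2 osd3 mon1 client
```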

When you are finished, try to access osd1 server from the ceph-admin node.

ssh osd1

Step 3 - Configure Firewalld

We will use Firewalld to protect the system. In this step, we will enable firewalld on all nodes, then open the ports needed by ceph-admin, ceph-mon and ceph-osd.

Login to the ceph-admin node and start firewalld.

ssh root@ceph-admin

systemctl start firewalld

systemctl enable firewalld

Open ports 80, 2003 and 4505-4506, and then reload the firewall.

sudo firewall-cmd --zone=public --add-port=80/tcp --permanent

sudo firewall-cmd --zone=public --add-port=2003/tcp --permanent

sudo firewall-cmd --zone=public --add-port=4505-4506/tcp --permanent

sudo firewall-cmd --reload

From the ceph-admin node, login to the monitor node 'mon1' and start firewalld.

ssh mon1

sudo systemctl start firewalld

sudo systemctl enable firewalld

Open port 6789 on the Ceph monitor node and reload the firewall.

sudo firewall-cmd --zone=public --add-port=6789/tcp --permanent

sudo firewall-cmd --reload

Finally, open ports 6800-7300 on each of the OSD nodes: osd1, osd2 and osd3.

Login to each osd node from the ceph-admin node.

ssh osd1

sudo systemctl start firewalld

sudo systemctl enable firewalld

Open the ports and reload the firewall.

sudo firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

sudo firewall-cmd --reload

Firewalld configuration is done.
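The port assignments from this step can be summarized as a small lookup that prints the matching firewall-cmd lines per node role. This is a dry-run sketch: it only prints commands and runs nothing, and the role labels (`admin`, `mon`, `osd`) and function names are assumptions chosen for illustration.

```shell
# Map a node role to the ports opened in this step.
# Role labels are illustrative, not Ceph terminology.
ports_for_role() {
  case "$1" in
    admin) echo "80/tcp 2003/tcp 4505-4506/tcp" ;;
    mon)   echo "6789/tcp" ;;
    osd)   echo "6800-7300/tcp" ;;
    *)     echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Print (not run) the firewall-cmd lines for a role.
firewall_commands() {
  ports=$(ports_for_role "$1") || return 1
  for port in $ports; do
    echo "sudo firewall-cmd --zone=public --add-port=$port --permanent"
  done
  echo "sudo firewall-cmd --reload"
}

# The same commands must be run on every OSD node.
for node in osd1 osd2 osd3; do
  echo "# on $node:"
  firewall_commands osd
done
```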

Step 4 - Configure the Ceph OSD Nodes

In this tutorial, we have 3 OSD nodes and each node has two partitions.

- /dev/sda for the root partition.

- /dev/sdb is an empty partition - 30GB in my case.

We will use /dev/sdb for the Ceph disk. From the ceph-admin node, log in to each OSD node in turn and repeat the steps below on every one of them to format /dev/sdb with XFS.

ssh osd1

ssh osd2

ssh osd3

Check the partition with the fdisk command.

sudo fdisk -l /dev/sdb

Format the /dev/sdb partition with XFS filesystem and with a GPT partition table by using the parted command.

sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

sudo mkfs.xfs /dev/sdb -f

Now check the partition. The command below should report the filesystem type of /dev/sdb as xfs.

sudo blkid -o value -s TYPE /dev/sdb

Step 5 - Build the Ceph Cluster

In this step, we will install Ceph on all nodes from the ceph-admin node.

Login to the ceph-admin node.

ssh root@ceph-admin

su - cephuser

Install ceph-deploy on the ceph-admin node

Add the Ceph repository and install the Ceph deployment tool 'ceph-deploy' with the yum command.

sudo rpm -Uhv http://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-1.el7.noarch.rpm

sudo yum update -y && sudo yum install ceph-deploy -y

Make sure all nodes are updated.

After the ceph-deploy tool has been installed, create a new directory for the ceph cluster configuration.

Create New Cluster Config

Create the new cluster directory.

mkdir cluster

cd cluster/

Next, create a new cluster configuration with the 'ceph-deploy' command, define the monitor node to be 'mon1'.

ceph-deploy new mon1

The command will generate the Ceph cluster configuration file 'ceph.conf' in the cluster directory.

Edit the ceph.conf file with vim.

vim ceph.conf

Under the [global] block, paste the configuration below.

# Your network address

public network = 10.0.15.0/24

osd pool default size = 2

Save the file and exit vim.

Install Ceph on All Nodes

Now install Ceph on all other nodes from the ceph-admin node. This can be done with a single command.

ceph-deploy install ceph-admin mon1 osd1 osd2 osd3

The command will automatically install Ceph on all nodes: mon1, osd1-3 and ceph-admin. The installation will take some time.

Now deploy the ceph-mon on mon1 node.

ceph-deploy mon create-initial

The command will create the monitor key. Check and gather the keys with the 'ceph-deploy' command below.

ceph-deploy gatherkeys mon1

Adding OSDs to the Cluster

When Ceph has been installed on all nodes, then we can add the OSD daemons to the cluster. OSD Daemons will create their data and journal partition on the disk /dev/sdb.

Check that the /dev/sdb partition is available on all OSD nodes.

ceph-deploy disk list osd1 osd2 osd3

You will see the /dev/sdb disk with XFS format.

Next, delete the /dev/sdb partition tables on all nodes with the zap option.

ceph-deploy disk zap osd1:/dev/sdb osd2:/dev/sdb osd3:/dev/sdb

The command will delete all data on /dev/sdb on the Ceph OSD nodes.

Now prepare all OSD nodes. Make sure there are no errors in the results.

ceph-deploy osd prepare osd1:/dev/sdb osd2:/dev/sdb osd3:/dev/sdb

If the output reports that osd1, osd2 and osd3 are ready for OSD use, then the deployment was successful.

Activate the OSDs with the command below:

ceph-deploy osd activate osd1:/dev/sdb1 osd2:/dev/sdb1 osd3:/dev/sdb1

Check the output for errors before you proceed. Now you can check the sdb disk on OSD nodes with the list command.

ceph-deploy disk list osd1 osd2 osd3

The result is that /dev/sdb now has two partitions:

- /dev/sdb1 - Ceph Data

- /dev/sdb2 - Ceph Journal

Or you can check that directly on the OSD node with fdisk.

ssh osd1

sudo fdisk -l /dev/sdb

Next, deploy the management-key to all associated nodes.

ceph-deploy admin ceph-admin mon1 osd1 osd2 osd3

Change the permission of the key file by running the command below on all nodes.

sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

The Ceph Cluster on CentOS 7 has been created.

Step 6 - Testing the Ceph setup

In step 5, we installed and created our new Ceph cluster, then added the OSD nodes to it. Now we can test the cluster and make sure there are no errors in the cluster setup.

From the ceph-admin node, log in to the ceph monitor server 'mon1'.

ssh mon1

Run the command below to check the cluster health.

sudo ceph health
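The first word of the output is the overall status: HEALTH_OK, HEALTH_WARN, or HEALTH_ERR. If you want to use the result in a script, a small wrapper along these lines can translate it into a short label. The function name `health_summary` is an assumption for illustration, and the loop feeds it only the bare status words rather than real cluster output.

```shell
# Turn the first word of `ceph health` output into a short label.
# health_summary is a hypothetical helper, not a Ceph command.
health_summary() {
  case "$1" in
    HEALTH_OK*)   echo "ok" ;;
    HEALTH_WARN*) echo "warning" ;;
    HEALTH_ERR*)  echo "error" ;;
    *)            echo "unknown" ;;
  esac
}

# Demo with the bare status words.
for status in HEALTH_OK HEALTH_WARN HEALTH_ERR; do
  echo "$status -> $(health_summary "$status")"
done

# Usage on mon1:
#   health_summary "$(sudo ceph health)"
```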

Now check the cluster status.

sudo ceph -s

The output should show that Ceph health is OK and that there is one monitor node, 'mon1', with IP address '10.0.15.11'. There should be 3 OSD servers, all up and running, and about 75GB of available disk space (3 x 25GB Ceph data partitions).
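Note that the 75GB figure is raw capacity. With `osd pool default size = 2` set in ceph.conf earlier, each object is stored twice, so the space available for actual data is roughly half of that. The sketch below does the arithmetic; `usable_gb` is a made-up helper, and the result ignores filesystem overhead and the headroom Ceph keeps before it considers OSDs full.

```shell
# Rough usable capacity for a replicated pool:
#   usable = OSD count * size per OSD / replica count
# usable_gb is a hypothetical helper; integer division rounds down.
usable_gb() {
  osd_count=$1
  gb_per_osd=$2
  replicas=$3
  echo $(( osd_count * gb_per_osd / replicas ))
}

echo "raw:    $(( 3 * 25 )) GB"
echo "usable: about $(usable_gb 3 25 2) GB with 2 replicas"
```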

Congratulations, you've built a new Ceph cluster successfully.

In the next part of the Ceph tutorial, I will show you how to use Ceph as a Block Device or mount it as a FileSystem.
