Deploy Kubernetes On-Premises Using CentOS 7 — Part 1

Annotation

I’ve been wanting to have a playground to mess around with Kubernetes (k8s) deployments for a while and didn’t want to spend the money on a cloud solution like AWS Elastic Container Service for Kubernetes or Google Kubernetes Engine. While these hosted solutions provide additional features such as the ability to spin up a load balancer, they also cost money every hour they’re available and I’m planning on leaving my cluster running. Also, from a learning perspective, there is no greater way to learn the underpinnings of a solution than having to deploy and manage it on your own.

Therefore, I set out to deploy Kubernetes (k8s) in my ESXi home lab on CentOS 7 virtual machines using kubeadm. You can also set up Kubernetes in your preferred environment, such as:

• Microsoft Hyper-V

• KVM

• Xen

• Proxmox

• VirtualBox

• VMware Workstation

I got off track a few times and thought another blog post with step by step instructions and screenshots would help others.

I trust it will be beneficial to you. Let’s get started.

Requirements

This post will walk through the deployment of Kubernetes version 1.28.2 (latest as of this posting) on three CentOS 7 virtual machines. One virtual machine will be the Kubernetes master server where the control plane components will be run and two additional nodes where the containers themselves will be scheduled. To begin the setup, deploy three CentOS 7 servers into your environment and be sure that the master node has 2 CPUs and all three servers have at least 2 GB of RAM on them. Remember the nodes will run your containers so the amount of RAM you need on those is dependent upon your workloads.

Also, I’m logging in as root on each of these nodes to run the commands. Adjust this if your environment needs to be more secure than my home lab.

CentOS 7 Installation

Install CentOS 7 in your preferred environment from the ISO image available at https://www.centos.org/download/. The method you choose to perform the installation doesn’t matter; what’s important is to end up with a fully functional CentOS 7 system. You also have the option of using the cloud and container images from https://cloud.centos.org/centos/.

The choice is yours.

You can refer to the screenshots below to see how I completed a clean installation from the ISO file.

After completing the installation, reboot the system to start using CentOS 7.

Let’s attempt to view the IP address on our new instance. As we can observe, there is no IP address present.

This occurs because, on a fresh CentOS 7 installation, the network interface is typically not activated at boot, so no address is requested from a DHCP server. To fix this, open the configuration file for your network interface (replace ‘ens192’ with your specific interface name):

vi /etc/sysconfig/network-scripts/ifcfg-ens192

Add the following settings. If a configuration already exists, modify it to look like the following:

DEVICE=ens192

ONBOOT=yes

BOOTPROTO=dhcp

Save your changes and exit.

Your new settings will not apply until the network interface is restarted. Restart the network interface:

ifdown ens192

ifup ens192

As you can see, after restarting the interface, we have obtained an IP address (10.0.0.14) from the DHCP server in my environment. If you don’t have a DHCP server in your environment, you can assign IP addresses manually.

I’m going to update the hostname of my master node to ‘kube-master.local’.

First, let’s verify the current hostname:

hostnamectl

Next, we’ll change the hostname to ‘kube-master.local’ with the following command:

hostnamectl set-hostname kube-master.local

After running this command, you’ll notice that the hostname has been successfully changed to ‘kube-master.local’.

Don’t forget to add your hostname to the hosts file as well. To do this, navigate to the /etc directory and edit the ‘hosts’ file, adding your hostname at the end of the 127.0.0.1 line.

Save your changes and exit
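If you would rather script the hosts edit than do it by hand, here is a minimal sketch. It assumes the file already contains a line starting with 127.0.0.1 and that GNU sed is available, as on CentOS 7. The helper name add_local_hostname is made up for illustration, and the demo runs against a scratch file rather than the real /etc/hosts.

```shell
#!/bin/sh
# add_local_hostname FILE NAME
# Append NAME to the 127.0.0.1 line of FILE unless it is already listed there.
# Hypothetical helper name, not a system tool.
add_local_hostname() {
    hosts_file=$1
    name=$2
    # Nothing to do if the name already appears as a whole word on the loopback line.
    if grep -E "^127\.0\.0\.1[[:space:]]" "$hosts_file" | grep -qw -- "$name"; then
        return 0
    fi
    # Append the name to the end of the 127.0.0.1 line; FILE.bak keeps the original.
    sed -i.bak -r "s/^(127\.0\.0\.1[[:space:]].*)/\1 $name/" "$hosts_file"
}

# Demo on a scratch copy with typical default content.
scratch=$(mktemp)
printf '127.0.0.1   localhost localhost.localdomain\n::1         localhost\n' > "$scratch"
add_local_hostname "$scratch" kube-master.local
cat "$scratch"
```

On a real node the call would be add_local_hostname /etc/hosts kube-master.local, run as root. Calling it a second time leaves the file unchanged.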

Prepare Each of the Servers for K8s

On all three servers (or more, if you chose to add more nodes) we’ll need to get the OS ready to handle our Kubernetes deployment via kubeadm. Let’s start by stopping and disabling the firewall by running these commands on each of the servers:

systemctl stop firewalld

systemctl disable firewalld

If you don’t want to disable the firewall, you should be aware of the ports and protocols used by Kubernetes. These ports must be open for the cluster to function.

The following TCP ports are used by Kubernetes control plane components:

Port 6443: Kubernetes API server

Ports 2379-2380: etcd server client API

Port 10250: Kubelet API

Port 10259: kube-scheduler

Port 10257: kube-controller-manager

The following TCP ports are used by Kubernetes worker nodes:

Port 10250: Kubelet API

Ports 30000-32767: NodePort Services

These are the default ports defined by Kubernetes. If you have set custom ports for any of them, allow those custom ports through the firewall instead.
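If you keep firewalld running, the port list above translates into firewall-cmd calls. The sketch below only prints the commands (a dry run) so you can review them before running them as root. The function name firewall_commands and the "master"/"worker" role labels are my own, not part of any tool.

```shell
#!/bin/sh
# Default Kubernetes TCP ports, as listed in this post.
CONTROL_PLANE_PORTS="6443/tcp 2379-2380/tcp 10250/tcp 10259/tcp 10257/tcp"
WORKER_PORTS="10250/tcp 30000-32767/tcp"

# firewall_commands ROLE
# Print one firewall-cmd call per port for ROLE ("master" or "worker").
# Dry run only: nothing is changed on the system.
firewall_commands() {
    case $1 in
        master) ports=$CONTROL_PLANE_PORTS ;;
        worker) ports=$WORKER_PORTS ;;
        *) echo "usage: firewall_commands master|worker" >&2; return 1 ;;
    esac
    for port in $ports; do
        echo "firewall-cmd --permanent --add-port=$port"
    done
    # Permanent rules only become active after a reload.
    echo "firewall-cmd --reload"
}

firewall_commands master
```

Piping the output to sh as root (firewall_commands worker | sh) would apply the rules. As far as I know, Flannel’s default VXLAN backend also needs UDP port 8472 open between nodes, which is outside the list quoted above.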

Next, let’s disable swap. Kubeadm will check to make sure that swap is disabled when we run it, so let’s turn swap off and keep it disabled across future reboots.

Disabling swap ensures stable and predictable performance in your Kubernetes cluster. Kubernetes workloads are designed to operate within allocated memory, and disabling swap enforces these constraints and prevents unexpected issues from memory swapping.
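Before editing the real /etc/fstab, it can be reassuring to try the sed expression used in the next step on a scratch copy. The sketch below wraps it in a function; the name comment_swap_entries and the sample fstab lines are made up for the demo.

```shell
#!/bin/sh
# comment_swap_entries FILE
# Prefix every line of FILE that contains " swap " with '#'.
# Same sed expression as in this post; a FILE.bak copy of the original is kept.
comment_swap_entries() {
    sed -i.bak -r 's/(.+ swap .+)/#\1/' "$1"
}

# Demo on a made-up scratch fstab instead of the real /etc/fstab.
scratch=$(mktemp)
cat > "$scratch" <<'EOF'
/dev/mapper/centos-root /                       xfs     defaults        0 0
/dev/mapper/centos-swap swap                    swap    defaults        0 0
EOF
comment_swap_entries "$scratch"
cat "$scratch"
```

Two things to keep in mind: the pattern looks for the word swap surrounded by literal spaces, so a tab-separated fstab would not match, and running the command twice adds a second # to the same line.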

swapoff -a

sed -i.bak -r 's/(.+ swap .+)/#\1/' /etc/fstab

Now we’ll need to relax SELinux if it is enforcing on our servers. I’m leaving it on, but setting it to permissive mode.

Setting SELinux (Security-Enhanced Linux) to permissive mode is recommended for a kubeadm installation because containers need access to the host filesystem, for example for pod networking, and enforcing mode can block that access and prevent the smooth operation of the cluster.

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

Next, we will configure the Kubernetes repository so that the package manager (yum) can install the Kubernetes packages. To do this, we will create a file named ‘kubernetes.repo’ in the ‘/etc/yum.repos.d’ directory.

Navigate to the /etc/yum.repos.d directory:

cd /etc/yum.repos.d

Create the ‘kubernetes.repo’ file:

touch kubernetes.repo

Now, edit this ‘kubernetes.repo’ file and add the following content:

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

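exclude=kube*

Save the file and exit. Note that the packages.cloud.google.com repository has since been deprecated upstream in favor of pkgs.k8s.io, so the baseurl and gpgkey above may no longer resolve.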
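The upstream installation guide now points at the community-owned pkgs.k8s.io repositories, which are split per minor release. A minimal sketch of writing an equivalent repo file in one step follows. The helper name write_k8s_repo and the scratch directory are assumptions for illustration, and the v1.28 path is chosen to match the 1.28.2 release used in this post.

```shell
#!/bin/sh
# write_k8s_repo DIR MINOR
# Write DIR/kubernetes.repo for one Kubernetes minor release (for example v1.28).
# Hypothetical helper; on a real node DIR would be /etc/yum.repos.d (run as root).
write_k8s_repo() {
    cat > "$1/kubernetes.repo" <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/$2/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/$2/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF
}

# Demo against a scratch directory so the sketch is safe to run anywhere.
scratch_dir=$(mktemp -d)
write_k8s_repo "$scratch_dir" v1.28
cat "$scratch_dir/kubernetes.repo"
```

The exclude line keeps a routine yum update from upgrading the cluster packages by accident. The --disableexcludes=kubernetes flag on the install command used later in this post lifts that exclusion for the one command where it is wanted.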

Now that we’ve set up our repos, let’s install the tools we’ll need on our servers: Docker, kubeadm, kubectl and kubelet.

=================================================================

Docker Engine on CentOS will be installed according to the official documentation by following the prescribed steps provided at (https://docs.docker.com/engine/install/centos/).

Before you install Docker Engine for the first time on a new host machine, you need to set up the Docker repository. Afterward, you can install and update Docker from the repository.

Install the yum-utils package (which provides the yum-config-manager utility) and set up the repository.

sudo yum install -y yum-utils

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

To install the latest version, run:

sudo yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

If prompted to accept the GPG key, verify that the fingerprint matches 060A 61C5 1B55 8A7F 742B 77AA C52F EB6B 621E 9F35, and if so, accept it.

This command installs Docker, but it doesn’t start Docker. It also creates a docker group, however, it doesn’t add any users to the group by default.

Start Docker.

sudo systemctl start docker

Verify that the Docker Engine is running.

sudo service docker status

To install kubeadm, kubectl and kubelet together, execute the following command:

yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

After installing Docker and our Kubernetes tools, we’ll need to enable the services so that they persist across reboots, and start the services so we can use them right away.

systemctl enable docker && systemctl start docker

systemctl enable kubelet && systemctl start kubelet

Before initiating our Kubernetes cluster setup, it’s essential to let iptables filter bridged traffic so that proxying functions correctly. To achieve this, create the following configuration file so that the setting persists across reboots.

Navigate to the /etc/sysctl.d/ directory:

cd /etc/sysctl.d

Create the ‘k8s.conf’ file:

touch k8s.conf

Now, edit this ‘k8s.conf’ file and add the following content:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

Save the file and exit.

Apply the kernel parameters:

sysctl --system

The sysctl --system command loads the kernel parameter settings from the system configuration files, including those in /etc/sysctl.d, and applies them immediately. Because the settings live in a file, they are applied again at every boot.
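The touch-then-edit steps can also be collapsed into a single here-document. This is a minimal sketch, with write_k8s_sysctl as a made-up helper name. The demo writes to a scratch file, and the commands for a real node are left as comments because they need root.

```shell
#!/bin/sh
# write_k8s_sysctl FILE
# Write the two bridge settings from this post into FILE.
# Hypothetical helper; on a real node FILE would be /etc/sysctl.d/k8s.conf.
write_k8s_sysctl() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demo against a scratch file so the sketch is safe to run anywhere.
scratch=$(mktemp)
write_k8s_sysctl "$scratch"
cat "$scratch"

# On a real node, as root:
#   modprobe br_netfilter                        # net.bridge.* keys exist only once this module is loaded
#   write_k8s_sysctl /etc/sysctl.d/k8s.conf
#   sysctl --system
#   sysctl net.bridge.bridge-nf-call-iptables    # read the value back to confirm
```

If sysctl --system complains that the net.bridge keys do not exist, the br_netfilter kernel module is most likely not loaded yet. On my understanding, starting Docker usually loads it, but loading it explicitly does no harm.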

Relax

The previous lengthy sections represent a significant phase in the configuration process. These steps must be executed on every machine that will participate in the Kubernetes cluster. As previously mentioned, we will have three CentOS machines (nodes) with identical configurations, all residing on the same network: one serving as the master node and two as worker nodes. The drawback is the manual effort: you have to repeat every step up to this point on each machine. Alternatively, you can clone the virtual machine and adjust only the hostname on each copy, since, as you may recall, we set the hostname of the current machine manually to kube-master.local.

In our case, we will create three nodes with the following identities. They are on the same network but have different IP addresses.

kube-master.local with an IP address of 10.0.0.14 (already created)

kube-node1.local with an IP address of 10.0.0.16 (need to create this one)

kube-node2.local with an IP address of 10.0.0.17 (need to create this one)

If you decide that two worker nodes are not enough for you, you should repeat these actions for all nodes. But remember, you can add new worker nodes to your Kubernetes cluster later, even if you initially set up the cluster with only two worker nodes. Kubernetes is designed to be scalable, and one of its key features is the ability to easily scale your cluster by adding or removing nodes as needed.
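If you go the cloning route, a tiny lookup table makes it harder to give a clone the wrong name. The sketch below maps the IP addresses from this post to their hostnames. The function name hostname_for_ip is an assumption of mine, and the hostnamectl call is left as a comment because it needs root on the node itself.

```shell
#!/bin/sh
# Node inventory from this post: "IP hostname", one pair per line.
NODES="10.0.0.14 kube-master.local
10.0.0.16 kube-node1.local
10.0.0.17 kube-node2.local"

# hostname_for_ip IP
# Print the hostname planned for IP, or nothing if the IP is not in the inventory.
hostname_for_ip() {
    echo "$NODES" | while read -r ip name; do
        if [ "$ip" = "$1" ]; then
            echo "$name"
        fi
    done
}

hostname_for_ip 10.0.0.16

# On a freshly cloned VM, as root:
#   hostnamectl set-hostname "$(hostname_for_ip 10.0.0.16)"
```

Adding a fourth worker later is then a one-line change to the inventory.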

Initialize Kubernetes Cluster on Master Node

We’re about ready to initialize our Kubernetes cluster, but I wanted to take a second to mention that we’ll be using Flannel as the network plugin to enable our pods to communicate with one another. You’re free to use other network plugins such as Calico or Cilium, but this post focuses on the Flannel plugin specifically.

Flannel is a popular Container Network Interface (CNI) plugin used in Kubernetes to provide networking capabilities for containerized applications. Its primary function is to create a virtual network that allows containers to communicate with each other, both within the same node and across different nodes in a Kubernetes cluster.

Let’s run kubeadm init on our master node with the --pod-network-cidr switch needed for Flannel to work properly.

kubeadm init --pod-network-cidr=10.244.0.0/16

When you run the init command, several pre-flight checks will take place, including making sure swap is off and iptables filtering is enabled.
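It is also worth confirming that the pod network range does not overlap the network your nodes live on, since overlapping ranges lead to confusing routing problems. Here is a minimal sketch of such a check in plain shell arithmetic. The function names are my own, and the /24 prefix for the 10.0.0.x node network is an assumption, since this post only lists individual addresses.

```shell
#!/bin/sh
# ip_to_int A.B.C.D
# Print the IPv4 address as a single 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the dotted quad into $1..$4
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# cidrs_overlap NET1/LEN1 NET2/LEN2
# Succeed (exit 0) if the two IPv4 ranges share any address.
# Two CIDR blocks overlap exactly when they agree on the bits of the shorter prefix.
cidrs_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    # 4294967295 is 0xFFFFFFFF; build the netmask for the shorter prefix.
    mask=$(( (4294967295 << (32 - $len)) & 4294967295 ))
    a_net=$(( $(ip_to_int "$a_ip") & $mask ))
    b_net=$(( $(ip_to_int "$b_ip") & $mask ))
    [ "$a_net" -eq "$b_net" ]
}

# Flannel pod CIDR from this post vs. the assumed node network.
if cidrs_overlap 10.244.0.0/16 10.0.0.0/24; then
    echo "overlap: pick a different pod CIDR"
else
    echo "no overlap"
fi
```

If your node network happened to be something like 10.244.3.0/24, the check would report an overlap, and you would want to pass a different range to --pod-network-cidr (and adjust the Flannel manifest to match).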

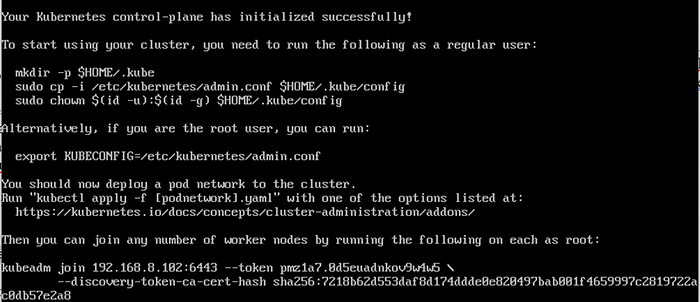

After the initialization is complete, you should have a working Kubernetes master node set up. The last few lines of output from the kubeadm init command are important to take note of, since they show the command to run on the worker nodes to join the cluster.

Attention!!!

During the pre-flight check, an error may appear that needs to be corrected before initialization. In my case, I encountered the error message “… the container runtime is not running…”.

This typically happens because the config.toml shipped with the containerd.io package disables containerd’s CRI plugin, which kubeadm needs. The issue can be resolved by removing the config.toml file and restarting the service.

sudo rm /etc/containerd/config.toml

sudo systemctl restart containerd

After a successful initialization, you will receive a join command that needs to be executed on the worker nodes that will be part of the cluster. In the screenshot below, you can find an example of the join command:

The token in the join command is only valid for a limited period of time. By default, the token is valid for 24 hours.

After 24 hours you need to generate a new join command:

kubeadm token create --print-join-command

Or you can specify the time to live when creating a token. The --ttl flag takes a duration in hours, minutes or seconds, so a token valid for 7 days is written as 168h:

kubeadm token create --ttl 168h

You can also list all existing tokens with kubeadm token list.

Before we start working on the other nodes, let’s make sure we can run the kubectl commands from the master node, in case we want to use it later on.

The following command sets an environment variable called KUBECONFIG to point to the path of the Kubernetes configuration file, admin.conf in this case. The KUBECONFIG environment variable is used by Kubernetes command-line tools such as kubectl to determine the configuration and context to use when interacting with a Kubernetes cluster.

export KUBECONFIG=/etc/kubernetes/admin.conf

After setting our KUBECONFIG variable, we can run kubectl get nodes just to verify that the cluster is serving requests to the API. You can see that I have one master node set up now.

kubectl get nodes

Configure Worker Nodes

Now that the master is set up, let’s focus on joining our two worker nodes to the cluster and setting up the networking. To begin, let’s join the nodes to the cluster using the command that was output by the kubeadm init command we ran on the master. This is the command that I used, but your cluster will have a different value. Please use your own and not the one shown below as an example.

kubeadm join 10.0.0.14:6443 --token c67hsz.br20l59z9oampi2w --discovery-token-ca-cert-hash sha256:481bcd1d8da24109a733bec69dbb09e1fa55c276616b5e79e7e69a3471facec5

Once you run the command on a node, you should get a success message that lets you know that it has joined the cluster successfully.

Perform the same procedure on the other worker nodes to add them to the cluster as well
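A join that fails because of a truncated copy and paste is easy to avoid with a quick format check before running it. The sketch below assumes the usual shapes: a bootstrap token of six characters, a dot, and sixteen characters (lowercase letters and digits), and a CA certificate hash of sha256: followed by 64 hex digits. The function name check_join_args is made up.

```shell
#!/bin/sh
# check_join_args TOKEN HASH
# Succeed only if both values have the shape kubeadm prints in its join command.
# This checks the format only; it cannot tell whether the token is still valid.
check_join_args() {
    if ! printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "token does not look like a kubeadm bootstrap token" >&2
        return 1
    fi
    if ! printf '%s\n' "$2" | grep -Eq '^sha256:[0-9a-f]{64}$'; then
        echo "CA cert hash is not sha256: followed by 64 hex digits" >&2
        return 1
    fi
    echo "join arguments look well-formed"
}

# Values from the example join command in this post.
check_join_args c67hsz.br20l59z9oampi2w \
    sha256:481bcd1d8da24109a733bec69dbb09e1fa55c276616b5e79e7e69a3471facec5
```

Whether a well-formed token has expired can only be checked on the master, for instance with kubeadm token list.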

At this point I’m going to copy the admin.conf file over to my worker nodes and set KUBECONFIG to use it for authentication so that I can run kubectl commands on the worker nodes as well. This step is optional if you are going to run kubectl commands elsewhere, like from your desktop computer.

The IP address of my master node is 10.0.0.14.

scp root@10.0.0.14:/etc/kubernetes/admin.conf /etc/kubernetes/admin.conf

export KUBECONFIG=/etc/kubernetes/admin.conf

Now if you run the kubectl get nodes command again, you should see that there are three nodes in our cluster.

If you’re paying close attention, you’ll notice that the nodes show a status of “NotReady”, which is a concern. This is because we haven’t finished deploying our network components for the Flannel plugin. To do that, we need to deploy the Flannel containers in our cluster (https://github.com/flannel-io/flannel) by running:

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

Give your cluster a moment and re-run the kubectl get nodes command. You should see that the nodes are all in a Ready status now.

Finally, we have a fully functional Kubernetes cluster consisting of one master and two worker nodes.
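Instead of re-running kubectl get nodes by hand until everything turns Ready, the check can be scripted. The sketch below counts nodes whose STATUS column is anything other than Ready. The function name not_ready_count and the sample output are made up for the demo, and the loop against a live cluster is left as a comment.

```shell
#!/bin/sh
# not_ready_count
# Read `kubectl get nodes --no-headers` output on stdin and print how many
# nodes have a STATUS (second column) other than exactly "Ready".
not_ready_count() {
    awk 'NF && $2 != "Ready" { n++ } END { print n + 0 }'
}

# Demo with made-up output resembling the state right after the join.
not_ready_count <<'EOF'
kube-master.local   Ready      control-plane   10m   v1.28.2
kube-node1.local    NotReady   <none>          2m    v1.28.2
kube-node2.local    NotReady   <none>          1m    v1.28.2
EOF

# Against a live cluster:
#   until [ "$(kubectl get nodes --no-headers | not_ready_count)" -eq 0 ]; do sleep 5; done
```

Note that a cordoned node shows a status like Ready,SchedulingDisabled and is counted as not ready by this sketch. kubectl also has a built-in alternative: kubectl wait --for=condition=Ready nodes --all --timeout=120s.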

kubectl cluster-info

Our on-premise Kubernetes cluster is fully configured, and we are ready to utilize the powerful capabilities of Kubernetes!
